July 2021

GitHub Copilot spits out live API keys from training data, exposing credentials.

August 2021

Researchers extract personal data from GPT‑2, proving memorization‑based data leaks.

September 2022

First GPT‑3 prompt‑injection override reveals hidden system instructions.

January 2023

Jailbroken ChatGPT generates malware and phishing kits, aiding cyber‑crime.

March 2023

Meta’s restricted LLaMA weights leak online, giving unrestricted public access.

May 2023

Samsung engineers paste source code into ChatGPT, leaking trade secrets.

November 2023

“Repeat forever” exploit forces ChatGPT to dump memorized personal information.

January 2024

Repeating multi‑token phrases still bypass GPT‑4 security patches and leak sensitive information.

October 2024

Claude with Computer Use (agentic AI) is vulnerable to the “ZombAI” attack, leading to autonomous code execution.

December 2024

Google Gemini models are jailbroken and their system prompts leak online upon release.

January 2025

New open-source model DeepSeek‑R1 ranks among the easiest models to prompt‑inject in security benchmarks.

May 2025

Remote prompt injection in GitLab Duo (Anthropic Claude) could lead to source code theft.

June 2025

Zero-click AI data leak flaw uncovered in Microsoft 365 Copilot.

Today

Don't let this be your next headline. Protect your AI systems from prompt injections, data leaks, and adversarial attacks, and stay ahead of future threats with expert help.

Services, Reimagined.

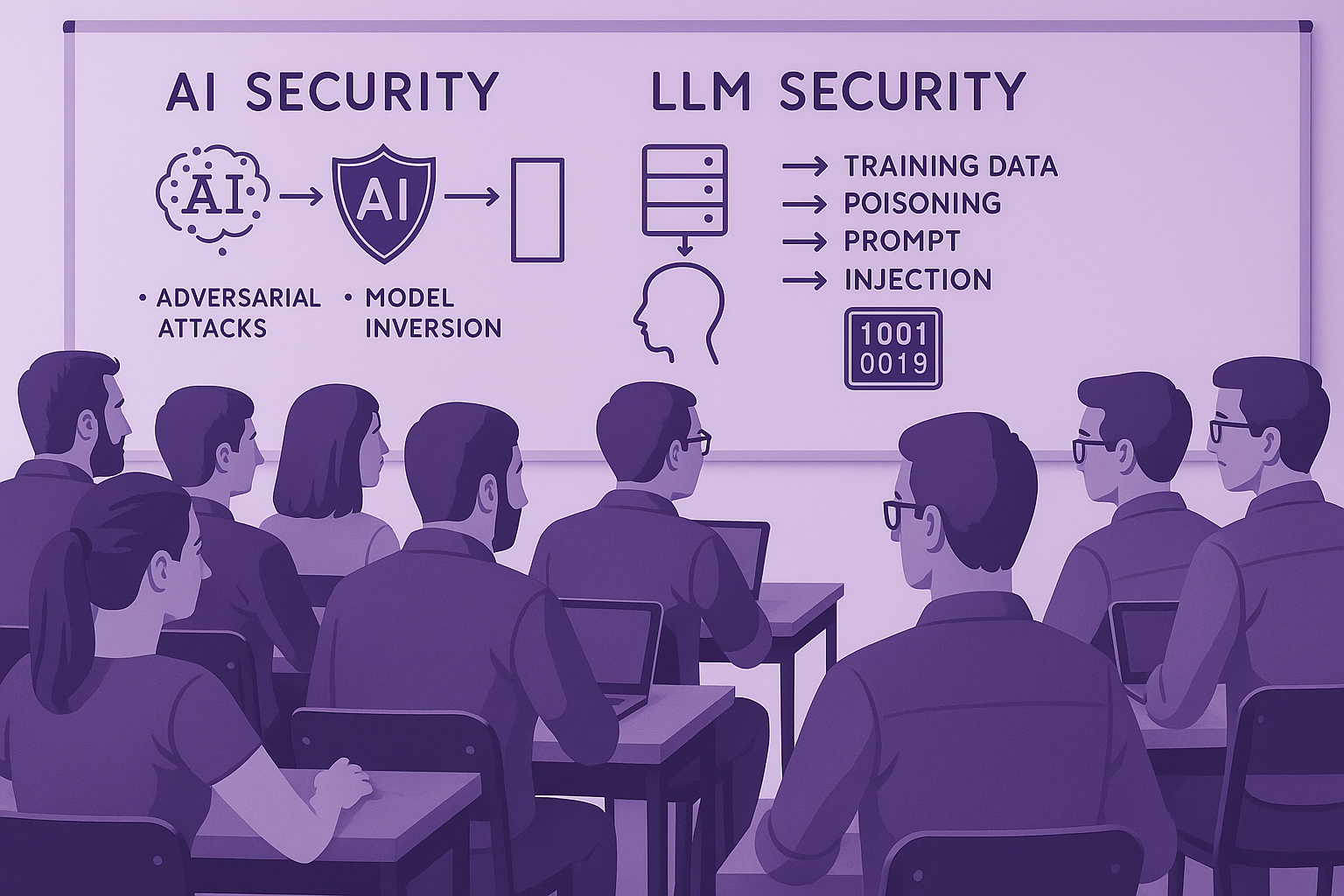

LLM Red Teaming & Penetration Testing

Simulating real-world attacks to uncover your AI system’s blind spots.

Secure AI Development Consulting

Helping teams develop AI responsibly, with security at the core.

Keynote & Conference Talks

Cutting through the hype with clear, actionable perspectives on AI and security.

LLM Security Upskilling Workshops

Equip your engineers with the skills to secure next-gen AI systems.

Ongoing AI Security Consulting

Long-term partnership to navigate the shifting landscape of AI threats.

AI Supply Chain & DevOps Pipelines Audits

Secure your AI stack—from third-party models to CI/CD pipelines.

Review of AI Architecture

Get a second set of eyes on your AI architecture to build cheaper, smarter and safer.

Command injection possible on the Llama2 model.

User input is not sanitized before entering the model.

Image‑generation filter appears to be limited.

Extra configuration might be needed on the ML cluster.

AI Threat Modeling & Risk Assessments

We map out risks so you can build AI that’s resilient by design.

Guides and Articles

About injectiqa

Tech

Insurance

Finance

Telecom

Startups

Security Expertise

Our team holds prestigious hacking certifications and brings years of hands-on experience in cybersecurity, ensuring deep insight into safeguarding AI systems against sophisticated threats.

Cross-industry Know-How

We combine cybersecurity expertise with proven experience across sectors such as tech, finance, and telecom, enabling us to provide tailored, effective solutions for diverse industry challenges.

Future-Proof Mindset

We are committed to operating at the forefront of technological innovation, so we continuously adapt and evolve our strategies to keep both you and us ahead in the rapidly advancing AI landscape.

Continuous Learning Ecosystem

We aim to create a dynamic environment dedicated to constant learning and growth, ensuring that both our team and yours stay informed, curious, and ready for emerging threats.

Swift & Adaptive

In a field where threats evolve daily, it is essential to pivot strategies and adapt solutions quickly, keeping your defenses robust and responsive.

Knowledge Transfer

We actively share our cybersecurity expertise to empower your teams, enabling you to independently build and sustain secure, resilient AI systems.